In this post I summarize the paper “Simulating Training Dynamics to Reconstruct Training Data from Deep Neural Networks” by Hanling Tian et al. published at ICLR 2025. It’s about how to recover the training data of a neural network by simulating how it learns — I found it really interesting.

The goal of a learning algorithm is to find the final parameters of a deep neural network (DNN) given the dataset and the initial parameters:

\[\theta_f = \mathcal{A}_H(\mathcal{D}, \theta_0)\]where the final parameters are $\theta_f$, the initial parameters are $\theta_0$, the dataset is $\mathcal{D}$, and $\mathcal{A}$ denotes an algorithm with hyperparameters $H$.

The authors formulate the dataset reconstruction as an inverse problem given the initial and the final parameters of the DNN:

\[\mathcal{D} = \mathcal{A}_H^{-1}(\theta_f, \theta_0).\](Note that, the initial parameters are known when we start from a pretrained model.)

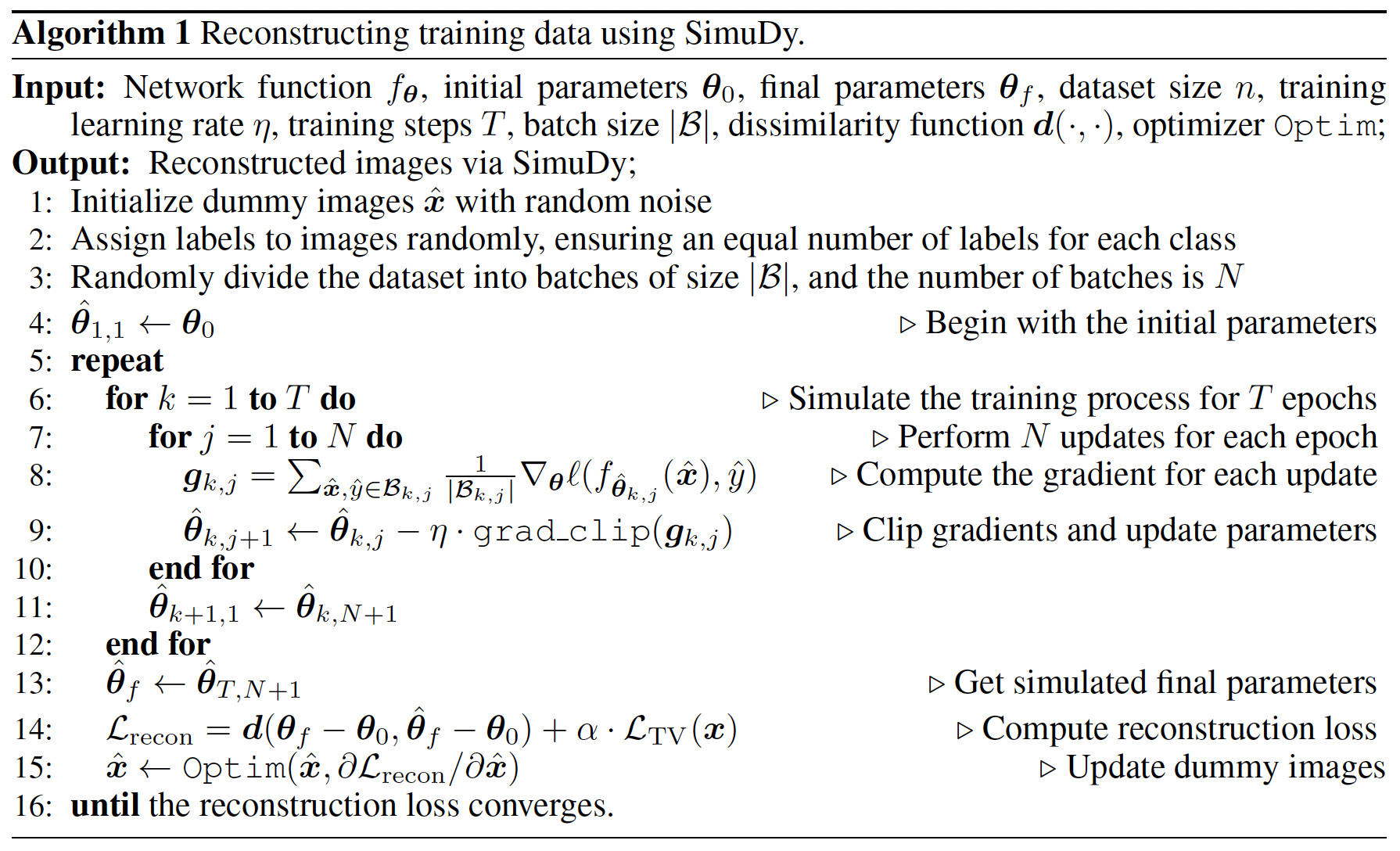

Algorithm

The paper considers images as data, and the goal is to learn the image samples that were used to produce the final parameters.

The algorithm starts by initializing the dummy images as noise, and by setting them as trainable parameters.

Step 1: The images are then used to train the model for a $T$ epochs, starting from $\theta_0$ and produce the final parameters $\hat{\theta}_f$.

Step 2: Afterward, the loss function is computed, and the images are updated based on their gradient with respect to the loss function.

Step 1 and Step 2 are repeated until convergence is reached.

Algorithm extracted from (Tian et al., 2025).

Algorithm extracted from (Tian et al., 2025).

Loss Function

The loss function comprises two parts:

it minimizes the distance (or dissimilarities) between the simulated final parameters $\hat{\theta}_f$ and the true final parameters $\theta_f$ using the cosine similarity (values between -1 and 1)

it minimizes the TV (total variation) of the reconstructed images. TV measures the distance between neighboring pixels, and it is used here to promote image smoothness

An important aspect of the algorithm is the usage of gradient clipping to prevent exploding gradient caused by noisy images.

Results

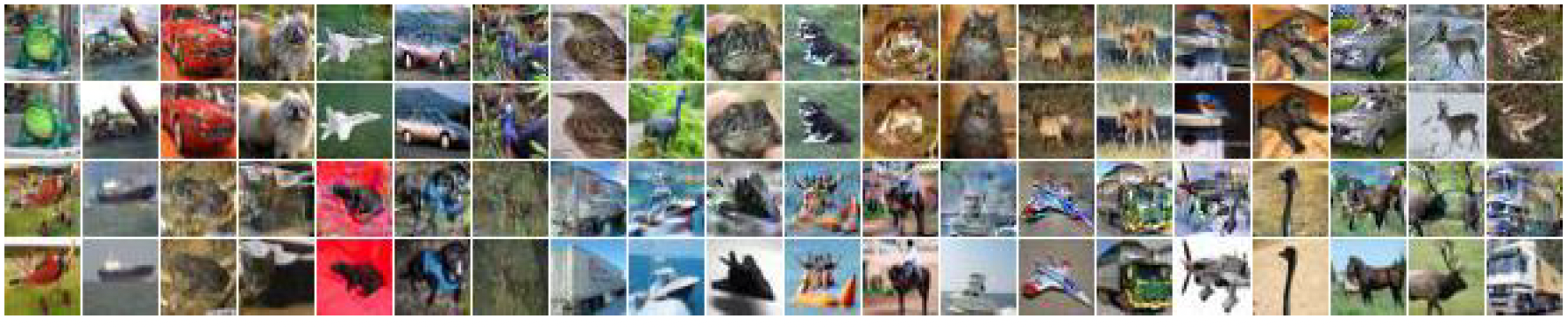

To measure the quality of the reconstructed dataset the SSIM is used as metric. The results show that the proposed method improves with respect to existing algorithms, but its performance declines as the dataset size increases (more challenging setup).

Top 40 images reconstructed from ResNet trained on 50 images and their corresponding nearest-neighbors (extracted from (Tian et al., 2025)).

Top 40 images reconstructed from ResNet trained on 50 images and their corresponding nearest-neighbors (extracted from (Tian et al., 2025)).

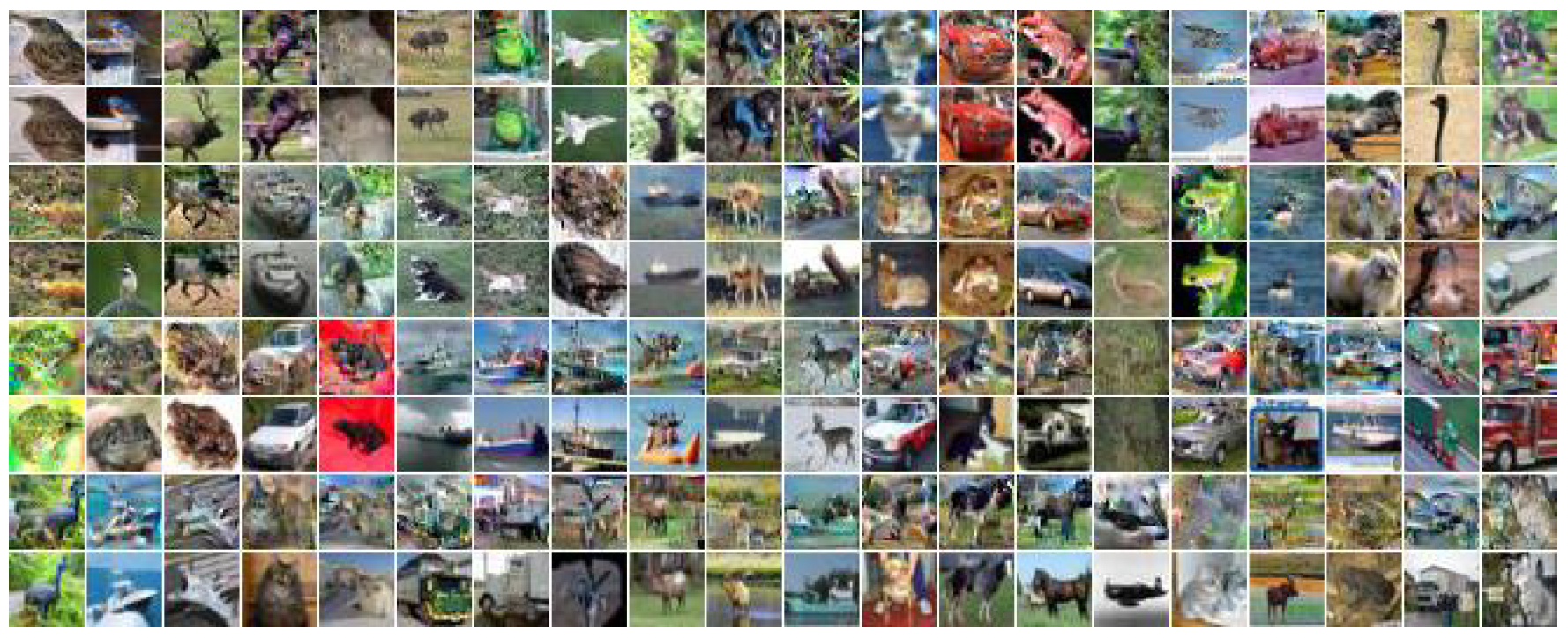

Top 80 images reconstructed from ResNet trained on 120 images and their corresponding nearest-neighbors (extracted from (Tian et al., 2025)).

Top 80 images reconstructed from ResNet trained on 120 images and their corresponding nearest-neighbors (extracted from (Tian et al., 2025)).

What we see is that as the dataset size increases the reconstruction quality decreases: $\text{SSIM}=0.1196$ (for dataset size 120) vs. $\text{SSIM}=0.1982$ (for dataset size 50).

Concluding thoughts ![]()

What I find fascinating about this paper is that it shows an intriguing aspect of deep learning: the possibility of reconstructing training data from model parameters alone. By framing the reconstruction as an inverse problem and simulating the training dynamics, the authors demonstrate that even highly complex models like ResNets can reveal sensitive information about the data they were trained on.

Despite the fact that the method’s performance drops as dataset size grows, it shows promising results for small datasets. This highlights exciting directions for research in both data privacy and interpretability, suggesting that understanding a model’s training trajectory can reveal far more than we would expect.

References

- Tian, H., Liu, Y., He, M., He, Z., Huang, Z., Yang, R., & Huang, X. (2025). Simulating Training Dynamics to Reconstruct Training Data from Deep Neural Networks. The Thirteenth International Conference on Learning Representations.